Tutorial 1: A basic introduction to pysumma functionality¶

This notebook gives a brief overview of pysumma functions.

It was originally created by Bart Nijssen and Andrew Bennett (University of Washington) for the “CUAHSI Virtual University snow modeling course 2019”. Modifications include a change of data and update of the naming scheme to comply with SUMMA v3.0. Source: https://github.com/bartnijssen/cuahsi_vu_2019

Loading the required modules¶

[1]:

%pylab inline

%load_ext autoreload

%autoreload 2

%reload_ext autoreload

import pysumma as ps

import pysumma.plotting as psp

import matplotlib.pyplot as plt

!cd data/reynolds && ./install_local_setup.sh && cd -

/home/bzq/workspace/pysumma/tutorial

Instantiating a simulation object¶

To set up a Simulation object you must supply 2 pieces of information.

Simulation object you can then just pass these to the constructor as shown below.[2]:

# Define location of .exe and file manager

executable = 'summa.exe'

file_manager = './data/reynolds/file_manager.txt'

[3]:

# Create a model instance

s = ps.Simulation(executable, file_manager)

Manipulating the configuration of the simulation object¶

Most of your interactions with pysumma will be facilitated through this Simulation object, so let’s take some time to look through what is in it. What’s contained in the Simulation object right after instantiation is generally just the input required for a SUMMA run. For a more in depth discussion of what these are see the SUMMA Input page of the documentation. There are several attributes of interest that can be

examined. To see each of them you can simply print them. Here’s a very high level overview of what’s available:

s.manager- the file managers.decisions- the decisions files.output_control- defines what variables to write outs.force_file_list- a listing of all of the forcing files to uses.local_attributes- describes GRU/HRU attributes (lat, lon, elevation, etc)s.global_hru_params- listing of spatially constant local (HRU) parameter valuess.global_gru_params- listing of spatially constant basin (GRU) parameter valuess.trial_params- spatially distributed parameter values (will overwritelocal_param_infovalues, can be either HRU or GRU)

Most of these objects have a similar interface defined, with exceptions being local_attributes and parameter_trial. Those two are standard xarray datasets. All others follow the simple API:

print(x) # Show the data as SUMMA reads it

x.get_option(NAME) # Get an option

x.set_option(NAME, VALUE) # Change an option

x.remove_option(NAME) # Remove an option

More intuitively, you can use key - value type indexing like dictionaries, dataframes, and datasets:

print(x['key']) # Get an option

x['key'] = value # Change an option

File manager¶

Let’s take a look at what the file manager text file actually contains:

[4]:

s.manager

[4]:

controlVersion 'SUMMA_FILE_MANAGER_V3.0.0'

simStartTime '1999-10-01 01:00'

simEndTime '2002-09-30 23:00'

tmZoneInfo 'localTime'

settingsPath '/home/bzq/workspace/pysumma/tutorial/data/reynolds/settings/'

forcingPath '/home/bzq/workspace/pysumma/tutorial/data/reynolds/forcing/'

outputPath '/home/bzq/workspace/pysumma/tutorial/data/reynolds/output/'

decisionsFile 'snow_zDecisions.txt'

outputControlFile 'snow_zOutputControl.txt'

globalHruParamFile 'snow_zLocalParamInfo.txt'

globalGruParamFile 'snow_zBasinParamInfo.txt'

attributeFile 'snow_zLocalAttributes.nc'

trialParamFile 'snow_zParamTrial.nc'

forcingListFile 'forcing_file_list.txt'

initConditionFile 'snow_zInitCond.nc'

outFilePrefix 'reynolds'

vegTableFile 'VEGPARM.TBL'

soilTableFile 'SOILPARM.TBL'

generalTableFile 'GENPARM.TBL'

noahmpTableFile 'MPTABLE.TBL'These are the file paths and names as the simulation object s knows them. You can compare this to the contents of the file ./pbhm-exercise-2/settings/reynolds/summa_fileManager_reynoldsConstantDecayRate.txt to see that they are identical. For this exercise, we’ll focus on three items: the model decisions or parametrizations, parameter values and output control.

Setting decisions¶

So, now that we’ve got a handle on what’s available and what you can do with it, let’s actually try some of this out. First let’s just print out our decisions file so we can see what’s in the defaults. Note that this only prints the decisions specified in the decision file (./pbhm-exercise-2/settings/reynolds/summa_zDecisions_reynoldsConstantDecayRate.txt) and not all the model decisions that can be made in SUMMA. For a full overview of modelling decision, see the SUMMA documentation:

https://summa.readthedocs.io/en/master/input_output/SUMMA_input/#infile_model_decisions

[5]:

s.decisions

[5]:

soilCatTbl ROSETTA ! soil-category dataset

vegeParTbl USGS ! vegetation-category dataset

soilStress NoahType ! choice of function for the soil moisture control on stomatal resistance

stomResist BallBerry ! choice of function for stomatal resistance

num_method itertive ! choice of numerical method

fDerivMeth analytic ! choice of method to calculate flux derivatives

LAI_method monTable ! choice of method to determine LAI and SAI

f_Richards mixdform ! form of Richards equation

groundwatr qTopmodl ! choice of groundwater parameterization

hc_profile pow_prof ! choice of hydraulic conductivity profile

bcUpprTdyn nrg_flux ! type of upper boundary condition for thermodynamics

bcLowrTdyn zeroFlux ! type of lower boundary condition for thermodynamics

bcUpprSoiH liq_flux ! type of upper boundary condition for soil hydrology

bcLowrSoiH zeroFlux ! type of lower boundary condition for soil hydrology

veg_traits CM_QJRMS1988 ! choice of parameterization for vegetation roughness length and displacement height

rootProfil powerLaw ! choice of parameterization for the rooting profile

canopyEmis difTrans ! choice of parameterization for canopy emissivity

snowIncept lightSnow ! choice of parameterization for snow interception

windPrfile logBelowCanopy ! choice of canopy wind profile

astability louisinv ! choice of stability function

canopySrad BeersLaw ! choice of method for canopy shortwave radiation

alb_method varDecay ! choice of albedo representation

compaction anderson ! choice of compaction routine

snowLayers CLM_2010 ! choice of method to combine and sub-divide snow layers

thCondSnow jrdn1991 ! choice of thermal conductivity representation for snow

thCondSoil funcSoilWet ! choice of thermal conductivity representation for soil

spatial_gw localColumn ! choice of method for spatial representation of groundwater

subRouting timeDlay ! choice of method for sub-grid routing

snowDenNew constDens ! choice of method for new snow densityGreat, we can see what’s in there. But to be able to change anything we need to know the available options for each decision. Let’s look at how to do that. For arbitrary reasons we will look at the snowIncept option, which describes the parameterization for snow interception in the canopy. First we will get it from the decisions object directly, and then query what it can be changed to, then finally change the value to something else.

[6]:

# Get just the `snowIncept` option

print(s.decisions['snowIncept'])

# Look at what we can set it to

print(s.decisions['snowIncept'].available_options)

# Change the value

s.decisions['snowIncept'] = 'stickySnow'

print(s.decisions['snowIncept'])

snowIncept lightSnow ! choice of parameterization for snow interception

['stickySnow', 'lightSnow']

snowIncept stickySnow ! choice of parameterization for snow interception

Changing parameters¶

Much like the decisions we can manipulate the gloabl_hru_param file. First, let’s look at what’s contained in it:

[7]:

print(s.global_hru_params)

upperBoundHead | -7.5d-1 | -1.0d+2 | -1.0d-2

lowerBoundHead | 0.0000 | -1.0d+2 | -1.0d-2

upperBoundTheta | 0.2004 | 0.1020 | 0.3680

lowerBoundTheta | 0.1100 | 0.1020 | 0.3680

upperBoundTemp | 272.1600 | 270.1600 | 280.1600

lowerBoundTemp | 274.1600 | 270.1600 | 280.1600

tempCritRain | 273.1600 | 272.1600 | 274.1600

tempRangeTimestep | 2.0000 | 0.5000 | 5.0000

frozenPrecipMultip | 1.0000 | 0.5000 | 1.5000

snowfrz_scale | 50.0000 | 10.0000 | 1000.0000

fixedThermalCond_snow | 0.3500 | 0.1000 | 1.0000

albedoMax | 0.8400 | 0.7000 | 0.9500

albedoMinWinter | 0.5500 | 0.6000 | 1.0000

albedoMinSpring | 0.5500 | 0.3000 | 1.0000

albedoMaxVisible | 0.9500 | 0.7000 | 0.9500

albedoMinVisible | 0.7500 | 0.5000 | 0.7500

albedoMaxNearIR | 0.6500 | 0.5000 | 0.7500

albedoMinNearIR | 0.3000 | 0.1500 | 0.4500

albedoDecayRate | 1.0d+6 | 1.0d+5 | 5.0d+6

albedoSootLoad | 0.3000 | 0.1000 | 0.5000

albedoRefresh | 1.0000 | 1.0000 | 10.0000

radExt_snow | 20.0000 | 20.0000 | 20.0000

Frad_direct | 0.7000 | 0.0000 | 1.0000

Frad_vis | 0.5000 | 0.0000 | 1.0000

directScale | 0.0900 | 0.0000 | 0.5000

newSnowDenMin | 100.0000 | 50.0000 | 100.0000

newSnowDenMult | 100.0000 | 25.0000 | 75.0000

newSnowDenScal | 5.0000 | 1.0000 | 5.0000

constSnowDen | 100.0000 | 50.0000 | 250.0000

newSnowDenAdd | 109.0000 | 80.0000 | 120.0000

newSnowDenMultTemp | 6.0000 | 1.0000 | 12.0000

newSnowDenMultWind | 26.0000 | 16.0000 | 36.0000

newSnowDenMultAnd | 1.0000 | 1.0000 | 3.0000

newSnowDenBase | 0.0000 | 0.0000 | 0.0000

densScalGrowth | 0.0460 | 0.0230 | 0.0920

tempScalGrowth | 0.0400 | 0.0200 | 0.0600

grainGrowthRate | 2.7d-6 | 1.0d-6 | 5.0d-6

densScalOvrbdn | 0.0230 | 0.0115 | 0.0460

tempScalOvrbdn | 0.0800 | 0.6000 | 1.0000

baseViscosity | 9.0d+5 | 5.0d+5 | 1.5d+6

Fcapil | 0.0600 | 0.0100 | 0.1000

k_snow | 0.0150 | 0.0050 | 0.0500

mw_exp | 3.0000 | 1.0000 | 5.0000

z0Snow | 0.0010 | 0.0010 | 10.0000

z0Soil | 0.0100 | 0.0010 | 10.0000

z0Canopy | 0.1000 | 0.0010 | 10.0000

zpdFraction | 0.6500 | 0.5000 | 0.8500

critRichNumber | 0.2000 | 0.1000 | 1.0000

Louis79_bparam | 9.4000 | 9.2000 | 9.6000

Louis79_cStar | 5.3000 | 5.1000 | 5.5000

Mahrt87_eScale | 1.0000 | 0.5000 | 2.0000

leafExchangeCoeff | 0.0100 | 0.0010 | 0.1000

windReductionParam | 0.2800 | 0.0000 | 1.0000

soil_dens_intr | 2700.0000 | 500.0000 | 4000.0000

thCond_soil | 5.5000 | 2.9000 | 8.4000

frac_sand | 0.1600 | 0.0000 | 1.0000

frac_silt | 0.2800 | 0.0000 | 1.0000

frac_clay | 0.5600 | 0.0000 | 1.0000

fieldCapacity | 0.2000 | 0.0000 | 1.0000

theta_mp | 0.4010 | 0.3000 | 0.6000

theta_sat | 0.5500 | 0.3000 | 0.6000

theta_res | 0.1390 | 0.0010 | 0.1000

vGn_alpha | -8.4d-1 | -1.0d+0 | -1.0d-2

vGn_n | 1.3000 | 1.0000 | 3.0000

mpExp | 5.0000 | 1.0000 | 10.0000

wettingFrontSuction | 0.3000 | 0.1000 | 1.5000

k_soil | 7.5d-6 | 1.0d-7 | 1.0d-5

k_macropore | 0.0010 | 1.0d-7 | 1.0d-5

kAnisotropic | 1.0000 | 0.0001 | 10.0000

zScale_TOPMODEL | 2.5000 | 0.1000 | 100.0000

compactedDepth | 1.0000 | 0.0000 | 1.0000

aquiferScaleFactor | 0.3500 | 0.1000 | 100.0000

aquiferBaseflowExp | 2.0000 | 1.0000 | 10.0000

aquiferBaseflowRate | 2.0000 | 1.0000 | 10.0000

qSurfScale | 50.0000 | 1.0000 | 100.0000

specificYield | 0.2000 | 0.1000 | 0.3000

specificStorage | 1.0d-9 | 1.0d-5 | 1.0d-7

f_impede | 2.0000 | 1.0000 | 10.0000

soilIceScale | 0.1300 | 0.0001 | 1.0000

soilIceCV | 0.4500 | 0.1000 | 5.0000

Kc25 | 296.0770 | 296.0770 | 296.0770

Ko25 | 0.2961 | 0.2961 | 0.2961

Kc_qFac | 2.1000 | 2.1000 | 2.1000

Ko_qFac | 1.2000 | 1.2000 | 1.2000

kc_Ha | 7.9d+4 | 7.9d+4 | 7.9d+4

ko_Ha | 3.6d+4 | 3.6d+4 | 3.6d+4

vcmax25_canopyTop | 40.0000 | 20.0000 | 100.0000

vcmax_qFac | 2.4000 | 2.4000 | 2.4000

vcmax_Ha | 6.5d+4 | 6.5d+4 | 6.5d+4

vcmax_Hd | 2.2d+5 | 1.5d+5 | 1.5d+5

vcmax_Sv | 710.0000 | 485.0000 | 485.0000

vcmax_Kn | 0.6000 | 0.0000 | 1.2000

jmax25_scale | 2.0000 | 2.0000 | 2.0000

jmax_Ha | 4.4d+4 | 4.4d+4 | 4.4d+4

jmax_Hd | 1.5d+5 | 1.5d+5 | 1.5d+5

jmax_Sv | 495.0000 | 495.0000 | 495.0000

fractionJ | 0.1500 | 0.1500 | 0.1500

quantamYield | 0.0500 | 0.0500 | 0.0500

vpScaleFactor | 1500.0000 | 1500.0000 | 1500.0000

cond2photo_slope | 9.0000 | 1.0000 | 10.0000

minStomatalConductance | 2000.0000 | 2000.0000 | 2000.0000

winterSAI | 1.0000 | 0.0100 | 3.0000

summerLAI | 3.0000 | 0.0100 | 10.0000

rootScaleFactor1 | 2.0000 | 1.0000 | 10.0000

rootScaleFactor2 | 5.0000 | 1.0000 | 10.0000

rootingDepth | 3.0000 | 0.0100 | 10.0000

rootDistExp | 1.0000 | 0.0100 | 1.0000

plantWiltPsi | -1.5d+2 | -5.0d+2 | 0.0000

soilStressParam | 5.8000 | 4.3600 | 6.3700

critSoilWilting | 0.0750 | 0.0000 | 1.0000

critSoilTranspire | 0.1750 | 0.0000 | 1.0000

critAquiferTranspire | 0.2000 | 0.1000 | 10.0000

minStomatalResistance | 50.0000 | 10.0000 | 200.0000

leafDimension | 0.0400 | 0.0100 | 0.1000

heightCanopyTop | 20.0000 | 0.0500 | 100.0000

heightCanopyBottom | 2.0000 | 0.0000 | 5.0000

specificHeatVeg | 874.0000 | 500.0000 | 1500.0000

maxMassVegetation | 25.0000 | 1.0000 | 50.0000

throughfallScaleSnow | 0.5000 | 0.1000 | 0.9000

throughfallScaleRain | 0.5000 | 0.1000 | 0.9000

refInterceptCapSnow | 6.6000 | 1.0000 | 10.0000

refInterceptCapRain | 1.0000 | 0.0100 | 1.0000

snowUnloadingCoeff | 0.0000 | 0.0000 | 1.5d-6

canopyDrainageCoeff | 0.0050 | 0.0010 | 0.0100

ratioDrip2Unloading | 0.4000 | 0.0000 | 1.0000

canopyWettingFactor | 0.7000 | 0.0000 | 1.0000

canopyWettingExp | 1.0000 | 0.0000 | 1.0000

minwind | 0.1000 | 0.0010 | 1.0000

minstep | 1.0000 | 1.0000 | 1800.0000

maxstep | 3600.0000 | 60.0000 | 1800.0000

wimplicit | 0.0000 | 0.0000 | 1.0000

maxiter | 100.0000 | 1.0000 | 100.0000

relConvTol_liquid | 0.0010 | 1.0d-5 | 0.1000

absConvTol_liquid | 1.0d-5 | 1.0d-8 | 0.0010

relConvTol_matric | 1.0d-6 | 1.0d-5 | 0.1000

absConvTol_matric | 1.0d-6 | 1.0d-8 | 0.0010

relConvTol_energy | 0.0100 | 1.0d-5 | 0.1000

absConvTol_energy | 1.0000 | 0.0100 | 10.0000

relConvTol_aquifr | 1.0000 | 0.0100 | 10.0000

absConvTol_aquifr | 1.0d-5 | 1.0d-5 | 0.1000

zmin | 0.0100 | 0.0050 | 0.1000

zmax | 0.0750 | 0.0100 | 0.5000

zminLayer1 | 0.0075 | 0.0075 | 0.0075

zminLayer2 | 0.0100 | 0.0100 | 0.0100

zminLayer3 | 0.0500 | 0.0500 | 0.0500

zminLayer4 | 0.1000 | 0.1000 | 0.1000

zminLayer5 | 0.2500 | 0.2500 | 0.2500

zmaxLayer1_lower | 0.0500 | 0.0500 | 0.0500

zmaxLayer2_lower | 0.2000 | 0.2000 | 0.2000

zmaxLayer3_lower | 0.5000 | 0.5000 | 0.5000

zmaxLayer4_lower | 1.0000 | 1.0000 | 1.0000

zmaxLayer1_upper | 0.0300 | 0.0300 | 0.0300

zmaxLayer2_upper | 0.1500 | 0.1500 | 0.1500

zmaxLayer3_upper | 0.3000 | 0.3000 | 0.3000

zmaxLayer4_upper | 0.7500 | 0.7500 | 0.7500

minTempUnloading | 270.1600 | 260.1600 | 273.1600

minWindUnloading | 0.0000 | 0.0000 | 10.0000

rateTempUnloading | 1.9d+5 | 1.0d+5 | 3.0d+5

rateWindUnloading | 1.6d+5 | 1.0d+5 | 3.0d+5

Yikes, that’s pretty long. Let’s change something for the sake of it:

[8]:

# Print it

print('old: ' + str(s.global_hru_params['albedoMax']))

# Change the value

s.global_hru_params['albedoMax'] = 0.9

print('new: ' + str(s.global_hru_params['albedoMax']))

old: albedoMax | 0.8400 | 0.7000 | 0.9500

new: albedoMax | 0.9000 | 0.9000 | 0.9000

Modifying output¶

And one more, we can also modify what get’s written to output. The output control file represents the options available through columns of numeric values. These numbers represent how to write the output. From the SUMMA documentation (https://summa.readthedocs.io/en/latest/input_output/SUMMA_input/#output-control-file) they are arranged as:

! varName | outFreq | inst | sum | mean | var | min | max | mode

As before, let’s look at what’s in the output_control by simply printing it out:

[9]:

print(s.output_control)

nSnow | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarSWE | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarSnowDepth | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarSnowfallTemp | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarSnowAge | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarInfiltration | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarExfiltration | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarTotalSoilLiq | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarTotalSoilIce | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarSurfaceRunoff | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarSurfaceTemp | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarSnowSublimation | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarLatHeatTotal | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarSenHeatTotal | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarSnowDrainage | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarRainfall | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

scalarSnowfall | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

mLayerVolFracIce | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

mLayerVolFracLiq | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

mLayerVolFracWat | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

mLayerDepth | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

mLayerTemp | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

mLayerHeight | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

iLayerHeight | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

iLayerNrgFlux | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

mLayerNrgFlux | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

mLayerLiqFluxSnow | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

iLayerLiqFluxSnow | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

iLayerConductiveFlux | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

iLayerAdvectiveFlux | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

Note that SUMMA is pretty flexible in its output. What we see above is a pretty typical output file configuration that contains most of the major state variables and fluxes. For a more complete overview of what you can ask SUMMA to output, see: https://github.com/NCAR/summa/blob/master/build/source/dshare/var_lookup.f90

We can modify values in the existing output_control in a couple of ways:

[10]:

# Check the settings for one of the output variables

print(s.output_control['scalarInfiltration'])

print(s.output_control['scalarInfiltration'].statistic)

# Change the output statistic from instantaneous to sum

s.output_control['scalarInfiltration'] = [1, 1, 0, 0, 0, 0, 0, 0]

print(s.output_control['scalarInfiltration'])

print(s.output_control['scalarInfiltration'].statistic)

# We could also be more verbose:

s.output_control['scalarInfiltration'] = {

'period': 1, 'instant': 1, 'sum': 0,

'mean': 0, 'variance': 0, 'min': 0, 'max': 0

}

print(s.output_control['scalarInfiltration'])

print(s.output_control['scalarInfiltration'].statistic)

scalarInfiltration | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

instant

scalarInfiltration | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0

sum

scalarInfiltration | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0

instant

Running pysumma and manipulating output¶

Now that you’ve had an overview of how you can interact with SUMMA configurations through pysumma let’s run a simulation. Before doing so we will reset our Simulation object, which will discard all of the changes we’ve made and load in a clean setup. Alternatively you could simply instantiate a new Simulation object. After running the simulation, we will make sure that it completed successfully by checking the status. With a complete run, we can look at the output simply by using the

simulation’s output attribute. It is simply an xarray dataset, which can be manipulated in all of the usual ways.

[11]:

s.reset()

# Or you could just create a new simulation object like before:

#s = ps.Simulation(executable, file_manager)

Before we run the model, we need to ensure that the output directory specified in the fileManger actually exists. If it doesn’t, SUMMA will notify you of this and abort the simulation.

[12]:

# module to handle tasks related to files and folders

import os

# Make the output directory if it doesn't exist

print('Current specified output directory: ' + s.manager['outputPath'].value)

if not os.path.exists(s.manager['outputPath'].value):

os.makedirs(s.manager['outputPath'].value)

Current specified output directory: /home/bzq/workspace/pysumma/tutorial/data/reynolds/output/

Now we can try a model run.

[13]:

s.run('local', run_suffix='_default') # run_suffix allows you to specify a string that will be added to the output file name. This is very useful to keep track of different experiments

print(s.status)

Success

You should have gotten a 'Success' printed out after running the simulation. For further details about the simulation you can look at the full output log that SUMMA produces by printing out s.stdout. In the event that s.status does not return Success you may wish to inspect this log to diagnose the problem further. s.stderror and s.stdout can provide details about the error.

Plotting¶

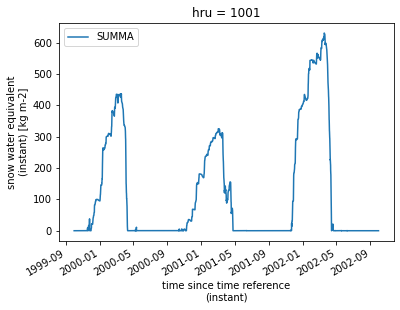

Now that we’ve got some output we can plot some results. Because the output is an xarray DataSet we can use the convenient plotting capabilities provided by xarray.

[14]:

s.output['scalarSWE'].plot(label='SUMMA');

plt.legend(); # note that 'plt' relies on: 'import matplotlib.pyplot as plt' which is magically included in the line `%pylab inline` in the first code block

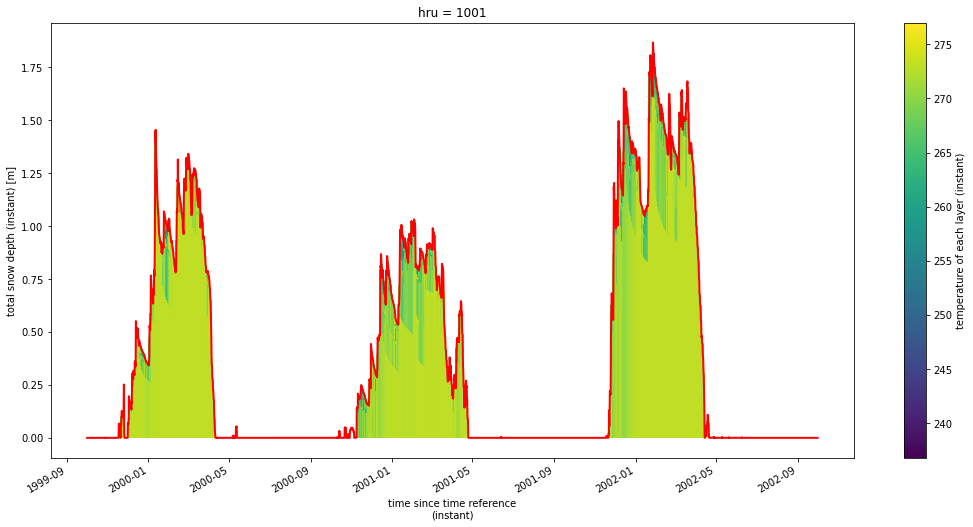

Additionally, pysumma provides some more specialized plotting capabilities. To access it we have the ps.plotting module. First, lets plot the vertical layers over time. For this we will use ps.plotting.layers, which requires two pieces of information. First, the variable that you want to plot. It should have both time and midToto dimensions. The first plot we will make will be the temperature, which uses the variable mLayerTemp, and the second will be the volumetric fraction

of water content in each layer, which uses mLayerVolFracWat. To start out we will give these more convenient names.

[15]:

depth = s.output.isel(hru=0)['iLayerHeight']

temp = s.output.isel(hru=0)['mLayerTemp']

frac_wat = s.output.isel(hru=0)['mLayerVolFracWat']

Now we can plot this using our function. For the temperature plot we will set plot_soil to False so that we only plot the snowpack. We can see that the top layers of the snowpack respond more quickly to the changing air temperature, and that later in the season the warmer air causes temperature transmission to lower layers and ultimately melts out.

[16]:

psp.layers(temp, depth, colormap='viridis', plot_soil=False, plot_snow=True);

s.output['scalarSnowDepth'].plot(color='red', linewidth=2);

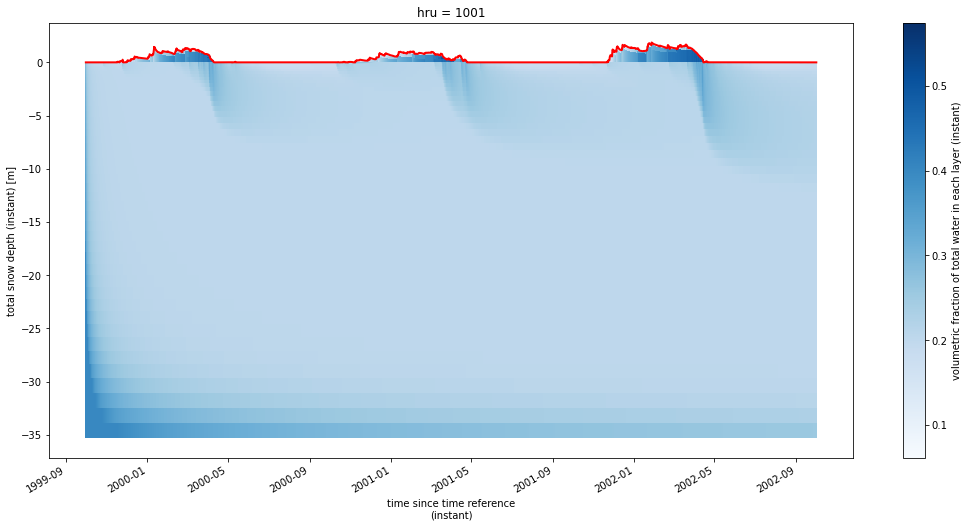

By looking at the volumetric water content we can see even more details. Now we will set plot_soil to True so that we can see how snowmelt can cause water infiltration into the soil. For example, during the melt season in 2012 we can easily see how the snowmelt infiltrates into the ground.

[17]:

psp.layers(frac_wat, depth, colormap='Blues', plot_soil=True, plot_snow=True);

s.output['scalarSnowDepth'].plot(color='red', linewidth=2);

[ ]:

Tutorial 2: Running ensembles of SUMMA simulations¶

pysumma offers an Ensemble class which is useful for running multiple simulations with varying options. These options can be parameter values, model structures, different locations made up of different file managers, or combinations of any of these. To demonstrate the Ensemble capabilities we will step through each individually. As usual we will begin with some imports and definition of some global variables.

[1]:

import numpy as np

import pysumma as ps

import matplotlib.pyplot as plt

from pprint import pprint

!cd data/reynolds && ./install_local_setup.sh && cd -

!cd data/coldeport && ./install_local_setup.sh && cd -

summa_exe = 'summa.exe'

file_manager = './data/coldeport/file_manager.txt'

/home/bzq/workspace/pysumma/tutorial

/home/bzq/workspace/pysumma/tutorial

Changing decisions¶

The Ensemble object mainly operates by taking configuration dictionaries. These can be defined manually, or can be defined through the use of helper functions which will be described later. For now, we will look at how to run models using different options for the stomResist and soilStress decisions. This is done by providing a dictionary of these mappings inside of a decisions key of the overall configuration. The decisions key is one of several special configuration keys

which pysumma knows how to manipulate under the hood. We will explore the others later.

This configuration is used to construct the Ensemble object, which also takes the SUMMA executable, a file manager path, and optionally the num_workers argument. The num_workers argument is used to automatically run these ensemble members in parallel. Here we define it as 2, since that’s how many different runs we will be completing. You can set this to a higher number than your computer has CPU cores, but you won’t likely see any additional speedup by doing so.

We then run the ensemble through the run method which works similarly to the Simulation object. After running the ensemble we check the status by running the summary method. This will return a dictionary outlining any successes or failures of each of the members. In the event of any failures you can later inspect the underlying Simulation objects that are internal to the Ensemble. We will demonstrate how to do this later in the tutorial.

[2]:

config = {

'run0': {'decisions':{'stomResist': 'Jarvis',

'soilStress': 'NoahType'}},

'run1': {'decisions':{'stomResist': 'BallBerry',

'soilStress': 'CLM_Type'}},

}

decision_ens = ps.Ensemble(summa_exe, config, file_manager, num_workers=2)

decision_ens.run('local')

print(decision_ens.summary())

{'Success': ['run0', 'run1'], 'Error': [], 'Other': []}

We also provide some functionality to make it easier to wrangle the data together via the merge_output method.

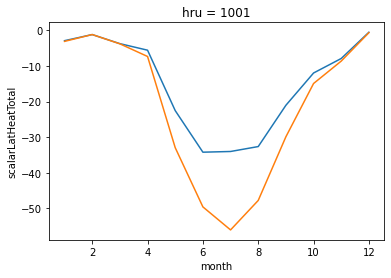

To access the simulations individually you can use the .simulations attribute, which is simply a dictionary of the Simulation objects mapped by the name that’s used to run the simulation. Let’s loop through and print the decisions directly from the Simulation as proof things worked. We can also open the output of each of the simulations as in the previous tutorial, and plot the monthly average latent heat flux for each simulation.

[3]:

for n, sim in decision_ens.simulations.items():

print(f'{n} used {sim.decisions["stomResist"].value} for the stomResist option and {sim.decisions["soilStress"].value} for soilStress')

run0 used Jarvis for the stomResist option and NoahType for soilStress

run1 used BallBerry for the stomResist option and CLM_Type for soilStress

[4]:

run0 = decision_ens.simulations['run0'].output.load()

run1 = decision_ens.simulations['run1'].output.load()

run0['scalarLatHeatTotal'].groupby(run0['time'].dt.month).mean().plot(label='run0')

run1['scalarLatHeatTotal'].groupby(run1['time'].dt.month).mean().plot(label='run1')

[4]:

[<matplotlib.lines.Line2D at 0x7f8f1a631790>]

Note in the previous example we didn’t run every combination of

stomResistandsoilStressthat we could have. When running multiple configurations it often becomes unwieldy to type out the full configuration that you are attempting to run, so some helper functions have been implemented to make this a bit easier. In the following cell we demonstrate this. You can see that the new output will show we have 4 configurations to run, each with a unique set of decisions. The names are just the

decisions being set, delimited by ++ so that it is easy to keep track of each run.

[5]:

decisions_to_run = {

'stomResist': ['Jarvis', 'BallBerry'],

'soilStress': ['NoahType', 'CLM_Type']

}

config = ps.ensemble.decision_product(decisions_to_run)

pprint(config)

{'++BallBerry++CLM_Type++': {'decisions': {'soilStress': 'CLM_Type',

'stomResist': 'BallBerry'}},

'++BallBerry++NoahType++': {'decisions': {'soilStress': 'NoahType',

'stomResist': 'BallBerry'}},

'++Jarvis++CLM_Type++': {'decisions': {'soilStress': 'CLM_Type',

'stomResist': 'Jarvis'}},

'++Jarvis++NoahType++': {'decisions': {'soilStress': 'NoahType',

'stomResist': 'Jarvis'}}}

[6]:

decision_ens = ps.Ensemble(summa_exe, config, file_manager, num_workers=4)

decision_ens.run('local')

print(decision_ens.summary())

{'Success': ['++Jarvis++NoahType++', '++Jarvis++CLM_Type++', '++BallBerry++NoahType++', '++BallBerry++CLM_Type++'], 'Error': [], 'Other': []}

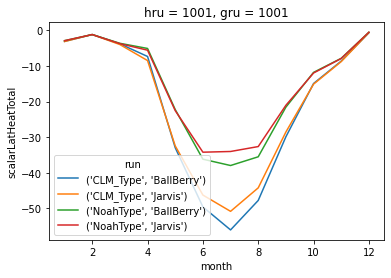

When ensembles have been run through these product configurations (we’ll detail a couple others later), you can use a special method to open them in a way that makes the output easier to wrangle. As before we’ll plot the mean monthly latent heat for each of the runs.

[7]:

ens_ds = decision_ens.merge_output()

ens_ds

[7]:

<xarray.Dataset>

Dimensions: (gru: 1, hru: 1, ifcSnow: 6, ifcToto: 15, midToto: 14, soilStress: 2, stomResist: 2, time: 52585)

Coordinates:

* time (time) datetime64[ns] 1994-10-01T00:59:59.99998659...

* hru (hru) int64 1001

* gru (gru) int64 1001

* stomResist (stomResist) object 'BallBerry' 'Jarvis'

* soilStress (soilStress) object 'CLM_Type' 'NoahType'

Dimensions without coordinates: ifcSnow, ifcToto, midToto

Data variables:

nSnow (time, hru, stomResist, soilStress) int32 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarSnowDepth (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarSWE (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

mLayerTemp (time, midToto, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

mLayerVolFracIce (time, midToto, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

mLayerVolFracLiq (time, midToto, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

mLayerVolFracWat (time, midToto, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

scalarSurfaceTemp (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

mLayerDepth (time, midToto, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

mLayerHeight (time, midToto, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

iLayerHeight (time, ifcToto, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 15, 1, 1, 2), meta=np.ndarray>

scalarSnowfallTemp (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarSnowAge (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarTotalSoilLiq (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarTotalSoilIce (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarRainfall (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarSnowfall (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarSenHeatTotal (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarLatHeatTotal (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarSnowSublimation (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

iLayerConductiveFlux (time, ifcToto, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 15, 1, 1, 2), meta=np.ndarray>

iLayerAdvectiveFlux (time, ifcToto, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 15, 1, 1, 2), meta=np.ndarray>

iLayerNrgFlux (time, ifcToto, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 15, 1, 1, 2), meta=np.ndarray>

mLayerNrgFlux (time, midToto, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

scalarSnowDrainage (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

iLayerLiqFluxSnow (time, ifcSnow, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 6, 1, 1, 2), meta=np.ndarray>

scalarInfiltration (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarExfiltration (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

scalarSurfaceRunoff (time, hru, stomResist, soilStress) float64 dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

hruId (hru, stomResist, soilStress) int64 dask.array<chunksize=(1, 1, 2), meta=np.ndarray>

gruId (gru, stomResist, soilStress) int64 dask.array<chunksize=(1, 1, 2), meta=np.ndarray>

Attributes:

summaVersion: v3.0.3

buildTime: Tue Nov 10 23:53:25 UTC 2020

gitBranch: tags/v3.0.3-0-g4ee457d

gitHash: 4ee457df3d3c0779696c6388c67962ba76736df9

soilCatTbl: ROSETTA

vegeParTbl: MODIFIED_IGBP_MODIS_NOAH

soilStress: NoahType

stomResist: Jarvis

num_method: itertive

fDerivMeth: analytic

LAI_method: monTable

notPopulatedYet: notPopulatedYet

f_Richards: mixdform

groundwatr: qTopmodl

hc_profile: pow_prof

bcUpprTdyn: nrg_flux

bcLowrTdyn: zeroFlux

bcUpprSoiH: liq_flux

bcLowrSoiH: zeroFlux

veg_traits: CM_QJRMS1988

rootProfil: powerLaw

canopyEmis: difTrans

snowIncept: lightSnow

windPrfile: logBelowCanopy

astability: louisinv

canopySrad: BeersLaw

alb_method: conDecay

snowLayers: CLM_2010

compaction: anderson

thCondSnow: smnv2000

thCondSoil: funcSoilWet

spatial_gw: localColumn

subRouting: timeDlay

snowDenNew: constDens- gru: 1

- hru: 1

- ifcSnow: 6

- ifcToto: 15

- midToto: 14

- soilStress: 2

- stomResist: 2

- time: 52585

- time(time)datetime64[ns]1994-10-01T00:59:59.999986592 .....

- long_name :

- time since time reference (instant)

array(['1994-10-01T00:59:59.999986592', '1994-10-01T02:00:00.000013408', '1994-10-01T03:00:00.000000000', ..., '2000-09-29T23:00:00.000013440', '2000-09-30T00:00:00.000000000', '2000-09-30T00:59:59.999986560'], dtype='datetime64[ns]') - hru(hru)int641001

- long_name :

- hruId in the input file

- units :

- -

array([1001])

- gru(gru)int641001

- long_name :

- gruId in the input file

- units :

- -

array([1001])

- stomResist(stomResist)object'BallBerry' 'Jarvis'

array(['BallBerry', 'Jarvis'], dtype=object)

- soilStress(soilStress)object'CLM_Type' 'NoahType'

array(['CLM_Type', 'NoahType'], dtype=object)

- nSnow(time, hru, stomResist, soilStress)int32dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- number of snow layers (instant)

- units :

- -

Array Chunk Bytes 841.36 kB 420.68 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type int32 numpy.ndarray - scalarSnowDepth(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- total snow depth (instant)

- units :

- m

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarSWE(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- snow water equivalent (instant)

- units :

- kg m-2

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - mLayerTemp(time, midToto, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

- long_name :

- temperature of each layer (instant)

- units :

- K

Array Chunk Bytes 23.56 MB 11.78 MB Shape (52585, 14, 1, 2, 2) (52585, 14, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - mLayerVolFracIce(time, midToto, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

- long_name :

- volumetric fraction of ice in each layer (instant)

- units :

- -

Array Chunk Bytes 23.56 MB 11.78 MB Shape (52585, 14, 1, 2, 2) (52585, 14, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - mLayerVolFracLiq(time, midToto, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

- long_name :

- volumetric fraction of liquid water in each layer (instant)

- units :

- -

Array Chunk Bytes 23.56 MB 11.78 MB Shape (52585, 14, 1, 2, 2) (52585, 14, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - mLayerVolFracWat(time, midToto, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

- long_name :

- volumetric fraction of total water in each layer (instant)

- units :

- -

Array Chunk Bytes 23.56 MB 11.78 MB Shape (52585, 14, 1, 2, 2) (52585, 14, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarSurfaceTemp(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- surface temperature (just a copy of the upper-layer temperature) (instant)

- units :

- K

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - mLayerDepth(time, midToto, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

- long_name :

- depth of each layer (instant)

- units :

- m

Array Chunk Bytes 23.56 MB 11.78 MB Shape (52585, 14, 1, 2, 2) (52585, 14, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - mLayerHeight(time, midToto, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

- long_name :

- height of the layer mid-point (top of soil = 0) (instant)

- units :

- m

Array Chunk Bytes 23.56 MB 11.78 MB Shape (52585, 14, 1, 2, 2) (52585, 14, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - iLayerHeight(time, ifcToto, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 15, 1, 1, 2), meta=np.ndarray>

- long_name :

- height of the layer interface (top of soil = 0) (instant)

- units :

- m

Array Chunk Bytes 25.24 MB 12.62 MB Shape (52585, 15, 1, 2, 2) (52585, 15, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarSnowfallTemp(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- temperature of fresh snow (instant)

- units :

- K

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarSnowAge(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- non-dimensional snow age (instant)

- units :

- -

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarTotalSoilLiq(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- total mass of liquid water in the soil (instant)

- units :

- kg m-2

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarTotalSoilIce(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- total mass of ice in the soil (instant)

- units :

- kg m-2

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarRainfall(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- computed rainfall rate (instant)

- units :

- kg m-2 s-1

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarSnowfall(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- computed snowfall rate (instant)

- units :

- kg m-2 s-1

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarSenHeatTotal(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- sensible heat from the canopy air space to the atmosphere (instant)

- units :

- W m-2

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarLatHeatTotal(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- latent heat from the canopy air space to the atmosphere (instant)

- units :

- W m-2

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarSnowSublimation(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- snow sublimation/frost (below canopy or non-vegetated) (instant)

- units :

- kg m-2 s-1

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - iLayerConductiveFlux(time, ifcToto, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 15, 1, 1, 2), meta=np.ndarray>

- long_name :

- conductive energy flux at layer interfaces (instant)

- units :

- W m-2

Array Chunk Bytes 25.24 MB 12.62 MB Shape (52585, 15, 1, 2, 2) (52585, 15, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - iLayerAdvectiveFlux(time, ifcToto, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 15, 1, 1, 2), meta=np.ndarray>

- long_name :

- advective energy flux at layer interfaces (instant)

- units :

- W m-2

Array Chunk Bytes 25.24 MB 12.62 MB Shape (52585, 15, 1, 2, 2) (52585, 15, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - iLayerNrgFlux(time, ifcToto, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 15, 1, 1, 2), meta=np.ndarray>

- long_name :

- energy flux at layer interfaces (instant)

- units :

- W m-2

Array Chunk Bytes 25.24 MB 12.62 MB Shape (52585, 15, 1, 2, 2) (52585, 15, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - mLayerNrgFlux(time, midToto, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 14, 1, 1, 2), meta=np.ndarray>

- long_name :

- net energy flux for each layer within the snow+soil domain (instant)

- units :

- J m-3 s-1

Array Chunk Bytes 23.56 MB 11.78 MB Shape (52585, 14, 1, 2, 2) (52585, 14, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarSnowDrainage(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- drainage from the bottom of the snow profile (instant)

- units :

- m s-1

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - iLayerLiqFluxSnow(time, ifcSnow, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 6, 1, 1, 2), meta=np.ndarray>

- long_name :

- liquid flux at snow layer interfaces (instant)

- units :

- m s-1

Array Chunk Bytes 10.10 MB 5.05 MB Shape (52585, 6, 1, 2, 2) (52585, 6, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarInfiltration(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- infiltration of water into the soil profile (instant)

- units :

- m s-1

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarExfiltration(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- exfiltration of water from the top of the soil profile (instant)

- units :

- m s-1

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - scalarSurfaceRunoff(time, hru, stomResist, soilStress)float64dask.array<chunksize=(52585, 1, 1, 2), meta=np.ndarray>

- long_name :

- surface runoff (instant)

- units :

- m s-1

Array Chunk Bytes 1.68 MB 841.36 kB Shape (52585, 1, 2, 2) (52585, 1, 1, 2) Count 28 Tasks 2 Chunks Type float64 numpy.ndarray - hruId(hru, stomResist, soilStress)int64dask.array<chunksize=(1, 1, 2), meta=np.ndarray>

- long_name :

- ID defining the hydrologic response unit

- units :

- -

Array Chunk Bytes 32 B 16 B Shape (1, 2, 2) (1, 1, 2) Count 28 Tasks 2 Chunks Type int64 numpy.ndarray - gruId(gru, stomResist, soilStress)int64dask.array<chunksize=(1, 1, 2), meta=np.ndarray>

- long_name :

- ID defining the grouped (basin) response unit

- units :

- -

Array Chunk Bytes 32 B 16 B Shape (1, 2, 2) (1, 1, 2) Count 28 Tasks 2 Chunks Type int64 numpy.ndarray

- summaVersion :

- v3.0.3

- buildTime :

- Tue Nov 10 23:53:25 UTC 2020

- gitBranch :

- tags/v3.0.3-0-g4ee457d

- gitHash :

- 4ee457df3d3c0779696c6388c67962ba76736df9

- soilCatTbl :

- ROSETTA

- vegeParTbl :

- MODIFIED_IGBP_MODIS_NOAH

- soilStress :

- NoahType

- stomResist :

- Jarvis

- num_method :

- itertive

- fDerivMeth :

- analytic

- LAI_method :

- monTable

- notPopulatedYet :

- notPopulatedYet

- f_Richards :

- mixdform

- groundwatr :

- qTopmodl

- hc_profile :

- pow_prof

- bcUpprTdyn :

- nrg_flux

- bcLowrTdyn :

- zeroFlux

- bcUpprSoiH :

- liq_flux

- bcLowrSoiH :

- zeroFlux

- veg_traits :

- CM_QJRMS1988

- rootProfil :

- powerLaw

- canopyEmis :

- difTrans

- snowIncept :

- lightSnow

- windPrfile :

- logBelowCanopy

- astability :

- louisinv

- canopySrad :

- BeersLaw

- alb_method :

- conDecay

- snowLayers :

- CLM_2010

- compaction :

- anderson

- thCondSnow :

- smnv2000

- thCondSoil :

- funcSoilWet

- spatial_gw :

- localColumn

- subRouting :

- timeDlay

- snowDenNew :

- constDens

[8]:

stack = ens_ds.isel(hru=0, gru=0).stack(run=['soilStress', 'stomResist'])

stack['scalarLatHeatTotal'].groupby(stack['time'].dt.month).mean(dim='time').plot.line(x='month')

[8]:

[<matplotlib.lines.Line2D at 0x7f8f19713710>,

<matplotlib.lines.Line2D at 0x7f8f19874ad0>,

<matplotlib.lines.Line2D at 0x7f8f19713350>,

<matplotlib.lines.Line2D at 0x7f8f197137d0>]

Changing parameters¶

Similarly, you can change parameter values to do sensitivity experiments:

[17]:

config = {

'lower_param': {'trial_params': {'windReductionParam': 0.1,

'canopyWettingFactor': 0.1}},

'upper_param': {'trial_params': {'windReductionParam': 0.9,

'canopyWettingFactor': 0.9}}

}

param_ens = ps.Ensemble(summa_exe, config, file_manager, num_workers=2)

param_ens.run('local')

print(param_ens.summary())

{'Success': ['lower_param', 'upper_param'], 'Error': [], 'Other': []}

As you can tell, it can quickly become tiresome to type out every parameter value you want to set. To that end we also have a helper function for setting up these parameter sensitivity experiments. Now you can see that we will end up with 4 configurations, as before. We won’t run this because it may take longer than is instructional. But, you can modify this notebook if you wish to see the effect each of these have.

[18]:

parameters_to_run = {

'windReductionParam': [0.1, 0.9],

'canopyWettingFactor': [0.1, 0.9]

}

config = ps.ensemble.trial_parameter_product(parameters_to_run)

pprint(config)

{'++windReductionParam=0.1++canopyWettingFactor=0.1++': {'trial_parameters': {'canopyWettingFactor': 0.1,

'windReductionParam': 0.1}},

'++windReductionParam=0.1++canopyWettingFactor=0.9++': {'trial_parameters': {'canopyWettingFactor': 0.9,

'windReductionParam': 0.1}},

'++windReductionParam=0.9++canopyWettingFactor=0.1++': {'trial_parameters': {'canopyWettingFactor': 0.1,

'windReductionParam': 0.9}},

'++windReductionParam=0.9++canopyWettingFactor=0.9++': {'trial_parameters': {'canopyWettingFactor': 0.9,

'windReductionParam': 0.9}}}

Running multiple sites via file managers¶

As you may have guessed, you can also define an Ensemble by providing a list of file managers. This is useful for running multiple sites which can’t be collected into a multi HRU run because they have different simulation times, are disjointly located, or for any other reason. It is important to note that in this case we don’t provide the Ensemble constructor a file_manager argument, as it is now provided in the configuration.

[20]:

config = {

'coldeport': {'file_manager': './data/coldeport/file_manager.txt'},

'reynolds' : {'file_manager': './data/reynolds/file_manager.txt'},

}

manager_ens = ps.Ensemble(summa_exe, config, num_workers=2)

manager_ens.run('local')

print(manager_ens.summary())

distributed.scheduler - ERROR - Couldn't gather keys {'_submit-261287ecf520a19948af16af51a4eede': []} state: ['processing'] workers: []

NoneType: None

distributed.scheduler - ERROR - Workers don't have promised key: [], _submit-261287ecf520a19948af16af51a4eede

NoneType: None

distributed.client - WARNING - Couldn't gather 1 keys, rescheduling {'_submit-261287ecf520a19948af16af51a4eede': ()}

{'Success': ['coldeport', 'reynolds'], 'Error': [], 'Other': []}

[21]:

managers_to_run = {

'file_manager': ['./data/coldeport/file_manager.txt', './data/coldeport/file_manager.txt']

}

config = ps.ensemble.file_manager_product(managers_to_run)

pprint(config)

{'++file_manager=./data/coldeport/file_manager.txt++': {'file_manager': {'file_manager': './data/coldeport/file_manager.txt'}}}

Combining ensemble types¶

Each of these abilities are useful in their own right, but the ability to combine them into greater ensembles provides a very flexible way to explore multiple hypotheses. To this end we also provide a helper function which can facilitate running these larger experiments. We won’t print out the entire configuration here, since it’s quite long. Instead we show that this would result in 32 SUMMA simulations. For that reason we also won’t run this experment by default, though you can if you wish to.

[22]:

config = ps.ensemble.total_product(dec_conf=decisions_to_run,

param_trial_conf=parameters_to_run,

fman_conf=managers_to_run)

print(len(config))

16

For illustrative purpose we show the first key of this configuration

[23]:

print(list(config.keys())[0])

++Jarvis++NoahType++windReductionParam=0.1++canopyWettingFactor=0.1++._data_coldeport_file_manager.txt++

As you can see, the keys (or names of the runs) grows when you include more options. This can be a problem for some operating systems/filesystems, along with being very hard to read. So, we also have a flag here that makes things more compact

[24]:

config = ps.ensemble.total_product(dec_conf=decisions_to_run,

param_trial_conf=parameters_to_run,

fman_conf=managers_to_run,

sequential_keys=True

)

print(list(config.keys())[0])

run_0

[ ]:

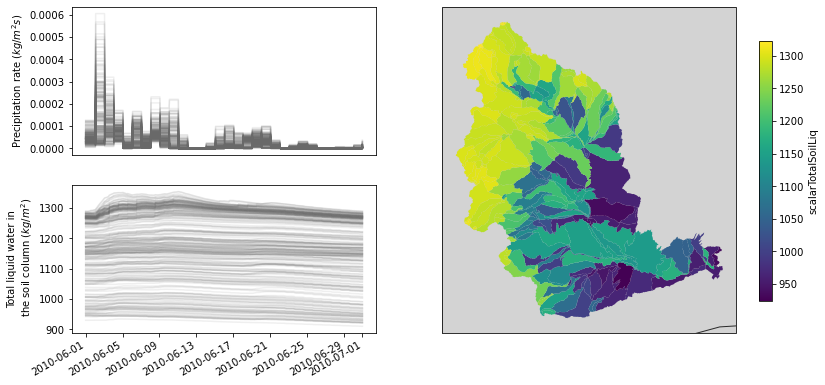

Tutorial 3: Running spatially distributed simulations¶

While the Ensemble class discussed in the previous tutorial can be used to run multiple locations via different file managers, this is generally not the way that a spatially-distributed SUMMA simulation would be run. For spatially-distributed run pysumma has a Distributed class, which makes running these types of simulations easier. For this example we will run a simulation of the Yakima River Basin in the Pacific Northwestern United States. As ususal, we start with some standard imports

and get the model setup installed for local use.

[1]:

import xarray as xr

import pysumma as ps

import geopandas as gpd

import cartopy.crs as ccrs

import pysumma.plotting as psp

import matplotlib.pyplot as plt

!cd data/yakima && ./install_local_setup.sh && cd -

/home/bzq/workspace/pysumma/tutorial

Running a basic Distributed simulation¶

Before getting into any of the further customization that can be done with a Distributed object let’s just run through the basic usage. If you are running this tutorial interactively, you are probably on a small binder instance or other machine that has a small amount of compute capacity. It’s important to point out that in this tutorial we will only run small examples, the parallelism approach of the Distributed object can easily scale out to hundreds of cores. As with the

Simulation and Ensemble objects, a Distributed object must be given a some information, namely the SUMMA executable and a file manager, to be instantiated. Additionally we will set 4 workers, which means that 4 SUMMA simulations will be run in parallel, and the num_chunks to 8. num_chunks describes how many simulations will be run in total. In this configuration each worker will run two SUMMA simulations. We can inspect these chunks directly by inspecting the underlying keys

for the Simulations in the Distributed instance, via yakima.simulations.keys(). Doing so we see that there are 8 simulations, each with 36 GRU (denoted by gX-X+35). As an alternative to the num_chunks argument, you can also use the chunk_size argument to specify how many GRU to run in each chunk.

[2]:

summa_exe = 'summa.exe'

file_manager = './data/yakima/file_manager.txt'

yakima = ps.Distributed(summa_exe, file_manager, num_workers=4, num_chunks=8)

yakima.simulations.keys()

/home/bzq/miniconda3/envs/all/lib/python3.7/site-packages/distributed/node.py:155: UserWarning: Port 8787 is already in use.

Perhaps you already have a cluster running?

Hosting the HTTP server on port 43425 instead

http_address["port"], self.http_server.port

[2]:

dict_keys(['g1-36', 'g37-72', 'g73-108', 'g109-144', 'g145-180', 'g181-216', 'g217-252', 'g253-285'])

As with the Simulation and Ensemble objects we can simply call .run() to start the simulations running. If you are running this tutorial interactively, this may take a moment because of the limited compute capacity of the binder instance. We have included the %%time magic to time how long this cell takes to run locally. You might expect this to take up to roughly two times the amount of time on the binder instance than is reported in the documentation at

pysumma.readthedocs.io. As with the Ensemble we can use the .summary() method once the runs are complete to ensure that they all exit with status Success.

[3]:

%%time

yakima.run()

print(yakima.summary())

{'Success': ['g1-36', 'g37-72', 'g73-108', 'g109-144', 'g145-180', 'g181-216', 'g217-252', 'g253-285'], 'Error': [], 'Other': []}

CPU times: user 10.6 s, sys: 1.93 s, total: 12.5 s

Wall time: 1min 4s

Once you’ve gotten a successful set of simulations, it’s time to look at the output! This can be done with the merge_output() method, which will collate all of the different runs together into a single xr.Dataset. If you want to open each of the output files individually without merging them you can sue the open_output() method. Once we’ve merged everything together, we see that there are 285 GRU (and similarly here, 285 HRU).

[4]:

yakima_ds = yakima.merge_output()

yakima_ds

[4]:

<xarray.Dataset>

Dimensions: (gru: 285, hru: 285, ifcToto: 14, midToto: 13, time: 721)

Coordinates:

* hru (hru) int64 17006965 17006967 ... 17009664

* time (time) datetime64[ns] 2010-06-01 ... 2010...

* gru (gru) int64 906922 906924 ... 909581 909583

Dimensions without coordinates: ifcToto, midToto

Data variables:

pptrate (time, hru) float64 7.562e-05 ... 1.418e-05

airtemp (time, hru) float64 279.2 279.1 ... 278.0

windspd_mean (time, hru) float64 0.8894 0.8894 ... 1.266

SWRadAtm (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

LWRadAtm (time, hru) float64 287.4 287.1 ... 288.3

scalarCanopyIce (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarCanopyLiq (time, hru) float64 0.2849 ... 0.07626

scalarCanopyWat_mean (time, hru) float64 0.2849 ... 0.07626

scalarCanairTemp (time, hru) float64 275.7 275.6 ... 275.8

scalarCanopyTemp (time, hru) float64 275.6 275.5 ... 275.6

scalarSnowAlbedo (time, hru) float64 -9.999e+03 ... -9.999...

scalarSnowDepth_mean (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarSWE (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

mLayerTemp_mean (time, midToto, hru) float64 281.0 ... -9...

scalarSurfaceTemp (time, hru) float64 281.0 281.0 ... 279.2

mLayerDepth_mean (time, midToto, hru) float64 0.025 ... -9...

mLayerHeight_mean (time, midToto, hru) float64 0.0125 ... -...

iLayerHeight_mean (time, ifcToto, hru) float64 -0.0 ... -9....

scalarTotalSoilLiq (time, hru) float64 1.169e+03 ... 1.273e+03

scalarTotalSoilIce (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarCanairNetNrgFlux (time, hru) float64 -5.337 -5.354 ... -8.861

scalarCanopyNetNrgFlux (time, hru) float64 -7.165 -7.193 ... -11.87

scalarGroundNetNrgFlux (time, hru) float64 -27.66 -27.68 ... -19.02

scalarLWNetUbound (time, hru) float64 -40.71 -40.77 ... -39.55

scalarSenHeatTotal (time, hru) float64 0.4441 0.4447 ... 3.267

scalarLatHeatTotal (time, hru) float64 -0.2061 ... -3.392

scalarLatHeatCanopyEvap (time, hru) float64 -0.1114 ... -2.079

scalarLatHeatCanopyTrans (time, hru) float64 -0.01746 ... -1.063

scalarLatHeatGround (time, hru) float64 -0.07726 ... -0.2504

scalarCanopySublimation (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarSnowSublimation (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarCanopyTranspiration (time, hru) float64 -6.98e-09 ... -4.248e-07

scalarCanopyEvaporation (time, hru) float64 -4.454e-08 ... -8.311...

scalarGroundEvaporation (time, hru) float64 -3.089e-08 ... -1.001...

scalarThroughfallSnow (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarThroughfallRain (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarCanopySnowUnloading_mean (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarRainPlusMelt (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarInfiltration (time, hru) float64 2.479e-14 ... 0.0

scalarExfiltration (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarSurfaceRunoff (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarSoilBaseflow (time, hru) float64 0.0 0.0 0.0 ... 0.0 0.0

scalarAquiferBaseflow (time, hru) float64 2.228e-11 ... 4.876e-09

scalarTotalRunoff (time, hru) float64 2.228e-11 ... 4.876e-09

scalarNetRadiation (time, hru) float64 -40.71 -40.77 ... -39.55

hruId (hru) int64 17006965 17006967 ... 17009664

averageInstantRunoff (time, gru) float64 4.448e-11 ... 9.744e-09

averageRoutedRunoff (time, gru) float64 4.459e-11 ... 9.92e-09

gruId (gru) int64 906922 906924 ... 909581 909583- gru: 285

- hru: 285

- ifcToto: 14

- midToto: 13

- time: 721

- hru(hru)int6417006965 17006967 ... 17009664

- long_name :

- hruId in the input file

- units :

- -

array([17006965, 17006967, 17006969, ..., 17009604, 17009662, 17009664])

- time(time)datetime64[ns]2010-06-01 ... 2010-07-01

- long_name :

- time since time reference (instant)

array(['2010-06-01T00:00:00.000000000', '2010-06-01T00:59:59.999986688', '2010-06-01T02:00:00.000013312', ..., '2010-06-30T21:59:59.999986688', '2010-06-30T23:00:00.000013312', '2010-07-01T00:00:00.000000000'], dtype='datetime64[ns]') - gru(gru)int64906922 906924 ... 909581 909583

- long_name :

- gruId in the input file

- units :

- -

array([906922, 906924, 906926, ..., 909523, 909581, 909583])

- pptrate(time, hru)float647.562e-05 7.606e-05 ... 1.418e-05

- long_name :

- precipitation rate (instant)

- units :

- kg m-2 s-1

array([[7.56163136e-05, 7.60601688e-05, 5.15421052e-05, ..., 5.12004772e-05, 7.64069482e-05, 6.71296293e-05], [7.56163136e-05, 7.60601688e-05, 5.15421052e-05, ..., 5.12004772e-05, 7.64069482e-05, 6.71296293e-05], [7.56163136e-05, 7.60601688e-05, 5.15421052e-05, ..., 5.12004772e-05, 7.64069482e-05, 6.71296293e-05], ..., [0.00000000e+00, 0.00000000e+00, 7.92784340e-06, ..., 2.89351846e-07, 9.61138994e-07, 8.68055565e-07], [0.00000000e+00, 0.00000000e+00, 7.92784340e-06, ..., 2.89351846e-07, 9.61138994e-07, 8.68055565e-07], [2.52938185e-06, 2.56903422e-06, 1.60732616e-05, ..., 1.76133108e-05, 1.61329936e-05, 1.41782411e-05]]) - airtemp(time, hru)float64279.2 279.1 287.9 ... 277.8 278.0

- long_name :

- air temperature at the measurement height (instant)

- units :

- K

array([[279.16464233, 279.10180664, 287.88330078, ..., 279.55426025, 277.30725098, 277.47616577], [277.83862305, 277.77490234, 286.64276123, ..., 278.72442627, 276.37030029, 276.52346802], [276.68637085, 276.62182617, 285.56466675, ..., 278.00335693, 275.55612183, 275.69558716], ..., [282.53366089, 282.48223877, 289.32037354, ..., 281.45050049, 279.69995117, 279.85458374], [280.41363525, 280.36248779, 287.4588623 , ..., 280.25997925, 278.4954834 , 278.64541626], [280.35980225, 280.31335449, 288.38198853, ..., 279.47991943, 277.83065796, 277.98855591]]) - windspd_mean(time, hru)float640.8894 0.8894 1.166 ... 1.265 1.266

- long_name :

- wind speed at the measurement height (mean)

- units :

- m s-1

array([[0.88942987, 0.8894136 , 1.16556144, ..., 1.46989083, 1.52078903, 1.52110004], [0.88942987, 0.8894136 , 1.16556144, ..., 1.46989083, 1.52078903, 1.52110004], [0.88942987, 0.8894136 , 1.16556144, ..., 1.46989083, 1.52078903, 1.52110004], ..., [2.54002619, 2.53933144, 2.87046552, ..., 2.1676178 , 2.15637445, 2.1572001 ], [2.54002619, 2.53933144, 2.87046552, ..., 2.1676178 , 2.15637445, 2.1572001 ], [1.60224426, 1.60180855, 1.77113569, ..., 1.29701996, 1.2652241 , 1.26619995]]) - SWRadAtm(time, hru)float640.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

- long_name :

- downward shortwave radiation at the upper boundary (instant)

- units :

- W m-2

array([[0., 0., 0., ..., 0., 0., 0.], [0., 0., 0., ..., 0., 0., 0.], [0., 0., 0., ..., 0., 0., 0.], ..., [0., 0., 0., ..., 0., 0., 0.], [0., 0., 0., ..., 0., 0., 0.], [0., 0., 0., ..., 0., 0., 0.]]) - LWRadAtm(time, hru)float64287.4 287.1 333.5 ... 287.8 288.3

- long_name :

- downward longwave radiation at the upper boundary (instant)

- units :

- W m-2

array([[287.40258789, 287.07562256, 333.48782349, ..., 296.21936035, 282.74954224, 283.06121826], [282.04776001, 281.72177124, 327.86801147, ..., 292.76242065, 278.99124146, 279.23876953], [277.45541382, 277.13037109, 323.04211426, ..., 289.78302002, 275.75579834, 275.94857788], ..., [267.30368042, 267.06115723, 329.26171875, ..., 296.42745972, 288.24688721, 288.90286255], [259.50274658, 259.26660156, 321.00296021, ..., 291.51055908, 283.37393188, 284.00302124], [281.47229004, 281.27328491, 319.14331055, ..., 297.82312012, 287.81790161, 288.33462524]]) - scalarCanopyIce(time, hru)float640.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

- long_name :

- mass of ice on the vegetation canopy (instant)

- units :

- kg m-2

array([[0. , 0. , 0. , ..., 0. , 0. , 0. ], [0. , 0. , 0. , ..., 0. , 0. , 0. ], [0. , 0. , 0. , ..., 0. , 0.02319168, 0.00730556], ..., [0. , 0. , 0. , ..., 0. , 0. , 0. ], [0. , 0. , 0. , ..., 0. , 0. , 0. ], [0. , 0. , 0. , ..., 0. , 0. , 0. ]]) - scalarCanopyLiq(time, hru)float640.2849 0.2866 ... 0.08693 0.07626

- long_name :

- mass of liquid water on the vegetation canopy (instant)

- units :

- kg m-2

array([[0.2848862 , 0.28661225, 0.35087317, ..., 0.25528067, 0.3613199 , 0.31721344], [0.55707802, 0.56040242, 0.54042229, ..., 0.44082329, 0.63726835, 0.55965798], [0.82944329, 0.83436647, 0.73040318, ..., 0.62767783, 0.89150277, 0.79635616], ..., [0.00523263, 0.00523645, 0.07405508, ..., 0.01060338, 0.03065529, 0.0269997 ], [0.00522588, 0.00522969, 0.09879395, ..., 0.01104933, 0.03200753, 0.02820905], [0.01282898, 0.01295744, 0.15836067, ..., 0.07099386, 0.08693351, 0.07625861]]) - scalarCanopyWat_mean(time, hru)float640.2849 0.2866 ... 0.08693 0.07626

- long_name :

- mass of total water on the vegetation canopy (mean)

- units :

- kg m-2

array([[0.2848862 , 0.28661225, 0.35087317, ..., 0.25528067, 0.3613199 , 0.31721344], [0.55707802, 0.56040242, 0.54042229, ..., 0.44082329, 0.63726835, 0.55965798], [0.82944329, 0.83436647, 0.73040318, ..., 0.62767783, 0.91469445, 0.80366172], ..., [0.00523263, 0.00523645, 0.07405508, ..., 0.01060338, 0.03065529, 0.0269997 ], [0.00522588, 0.00522969, 0.09879395, ..., 0.01104933, 0.03200753, 0.02820905], [0.01282898, 0.01295744, 0.15836067, ..., 0.07099386, 0.08693351, 0.07625861]]) - scalarCanairTemp(time, hru)float64275.7 275.6 282.1 ... 275.6 275.8

- long_name :

- temperature of the canopy air space (instant)

- units :

- K

array([[275.67493913, 275.61897099, 282.13812679, ..., 276.5496316 , 274.51310888, 274.63401125], [274.6583324 , 274.59992024, 281.88236478, ..., 275.86111914, 273.72431877, 273.82292771], [273.79851508, 273.73867956, 281.44635536, ..., 275.33920245, 273.25997274, 273.26301317], ..., [281.52671199, 281.47458662, 285.01133945, ..., 280.74910573, 278.95596146, 279.11499477], [279.53404011, 279.48192071, 283.28165643, ..., 279.5529211 , 277.74530593, 277.901004 ], [276.95616383, 276.90417864, 281.9011963 , ..., 277.4979156 , 275.61958526, 275.7662665 ]]) - scalarCanopyTemp(time, hru)float64275.6 275.5 282.1 ... 275.5 275.6

- long_name :

- temperature of the vegetation canopy (instant)

- units :

- K

array([[275.5898463 , 275.53362052, 282.10450556, ..., 276.44422027, 274.38967576, 274.51008951], [274.58872865, 274.53015735, 281.84347191, ..., 275.76600997, 273.61315019, 273.71158479], [273.7375521 , 273.67761803, 281.40141704, ..., 275.24874308, 273.15677422, 273.15808441], ..., [280.99633119, 280.94439876, 284.94327306, ..., 280.39597057, 278.60141797, 278.7604709 ], [279.03835098, 278.98636991, 283.20519399, ..., 279.21507167, 277.40745324, 277.56311727], [276.76309563, 276.71128983, 281.87593833, ..., 277.34612592, 275.47096086, 275.61721434]]) - scalarSnowAlbedo(time, hru)float64-9.999e+03 ... -9.999e+03

- long_name :

- snow albedo for the entire spectral band (instant)

- units :

- -

array([[-9999., -9999., -9999., ..., -9999., -9999., -9999.], [-9999., -9999., -9999., ..., -9999., -9999., -9999.], [-9999., -9999., -9999., ..., -9999., -9999., -9999.], ..., [-9999., -9999., -9999., ..., -9999., -9999., -9999.], [-9999., -9999., -9999., ..., -9999., -9999., -9999.], [-9999., -9999., -9999., ..., -9999., -9999., -9999.]]) - scalarSnowDepth_mean(time, hru)float640.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

- long_name :

- total snow depth (mean)

- units :

- m

array([[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], [0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], [0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], ..., [0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], [0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], [5.95065803e-10, 6.71683864e-10, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00]]) - scalarSWE(time, hru)float640.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

- long_name :

- snow water equivalent (instant)

- units :

- kg m-2

array([[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], [0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], [0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], ..., [0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], [0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], [8.92598704e-08, 1.00752580e-07, 0.00000000e+00, ..., 0.00000000e+00, 0.00000000e+00, 0.00000000e+00]]) - mLayerTemp_mean(time, midToto, hru)float64281.0 281.0 ... -9.999e+03

- long_name :

- temperature of each layer (mean)

- units :

- K

array([[[ 281.01348304, 280.96274488, 286.23043799, ..., 280.43873073, 278.69752846, 278.84283714], [ 281.73704322, 281.68621174, 287.23176891, ..., 280.96916059, 279.25721178, 279.40621677], [ 281.87887428, 281.82764699, 287.87448249, ..., 281.11595109, 279.42198287, 279.57182636], ..., [-9999. , -9999. , -9999. , ..., -9999. , -9999. , -9999. ], [-9999. , -9999. , -9999. , ..., -9999. , -9999. , -9999. ], [-9999. , -9999. , -9999. , ..., -9999. , -9999. , -9999. ]], [[ 280.54024602, 280.48915431, 285.81529782, ..., 280.09974108, 278.33034657, 278.4705361 ], [ 281.36612715, 281.31522321, 286.8483686 , ..., 280.7094432 , 278.98149345, 279.12766476], [ 281.78925887, 281.73810036, 287.74284 , ..., 281.05507782, 279.35631722, 279.50560905], ... [-9999. , -9999. , -9999. , ..., -9999. , -9999. , -9999. ], [-9999. , -9999. , -9999. , ..., -9999. , -9999. , -9999. ], [-9999. , -9999. , -9999. , ..., -9999. , -9999. , -9999. ]], [[ 281.50267905, 281.45215995, 286.81199433, ..., 280.80265241, 279.08511166, 279.23546735], [ 282.10756768, 282.0563797 , 287.8339394 , ..., 281.21854807, 279.51019407, 279.66207391], [ 281.92149854, 281.86973562, 288.18195298, ..., 281.13592528, 279.43489511, 279.58600375], ..., [-9999. , -9999. , -9999. , ..., -9999. , -9999. , -9999. ], [-9999. , -9999. , -9999. , ..., -9999. , -9999. , -9999. ], [-9999. , -9999. , -9999. , ..., -9999. , -9999. , -9999. ]]]) - scalarSurfaceTemp(time, hru)float64281.0 281.0 286.2 ... 279.1 279.2

- long_name :

- surface temperature (just a copy of the upper-layer temperature) (instant)

- units :

- K

array([[281.01348304, 280.96274488, 286.23043799, ..., 280.43873073, 278.69752846, 278.84283714], [280.54024602, 280.48915431, 285.81529782, ..., 280.09974108, 278.33034657, 278.4705361 ], [280.09935275, 280.04798328, 285.40362561, ..., 279.79539676, 278.01405943, 278.1405834 ], ..., [282.78789386, 282.73631115, 288.34826687, ..., 281.63185555, 279.92142551, 280.07575016], [282.09769348, 282.04672115, 287.39572212, ..., 281.23163826, 279.52051871, 279.67307917], [281.50267905, 281.45215995, 286.81199433, ..., 280.80265241, 279.08511166, 279.23546735]]) - mLayerDepth_mean(time, midToto, hru)float640.025 0.025 ... -9.999e+03

- long_name :

- depth of each layer (mean)

- units :

- m

array([[[ 2.500e-02, 2.500e-02, 2.500e-02, ..., 2.500e-02, 2.500e-02, 2.500e-02], [ 7.500e-02, 7.500e-02, 7.500e-02, ..., 7.500e-02, 7.500e-02, 7.500e-02], [ 1.500e-01, 1.500e-01, 1.500e-01, ..., 1.500e-01, 1.500e-01, 1.500e-01], ..., [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03]], [[ 2.500e-02, 2.500e-02, 2.500e-02, ..., 2.500e-02, 2.500e-02, 2.500e-02], [ 7.500e-02, 7.500e-02, 7.500e-02, ..., 7.500e-02, 7.500e-02, 7.500e-02], [ 1.500e-01, 1.500e-01, 1.500e-01, ..., 1.500e-01, 1.500e-01, 1.500e-01], ... [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03]], [[ 2.500e-02, 2.500e-02, 2.500e-02, ..., 2.500e-02, 2.500e-02, 2.500e-02], [ 7.500e-02, 7.500e-02, 7.500e-02, ..., 7.500e-02, 7.500e-02, 7.500e-02], [ 1.500e-01, 1.500e-01, 1.500e-01, ..., 1.500e-01, 1.500e-01, 1.500e-01], ..., [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03]]]) - mLayerHeight_mean(time, midToto, hru)float640.0125 0.0125 ... -9.999e+03

- long_name :

- height of the layer mid-point (top of soil = 0) (mean)

- units :

- m

array([[[ 1.250e-02, 1.250e-02, 1.250e-02, ..., 1.250e-02, 1.250e-02, 1.250e-02], [ 6.250e-02, 6.250e-02, 6.250e-02, ..., 6.250e-02, 6.250e-02, 6.250e-02], [ 1.750e-01, 1.750e-01, 1.750e-01, ..., 1.750e-01, 1.750e-01, 1.750e-01], ..., [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03]], [[ 1.250e-02, 1.250e-02, 1.250e-02, ..., 1.250e-02, 1.250e-02, 1.250e-02], [ 6.250e-02, 6.250e-02, 6.250e-02, ..., 6.250e-02, 6.250e-02, 6.250e-02], [ 1.750e-01, 1.750e-01, 1.750e-01, ..., 1.750e-01, 1.750e-01, 1.750e-01], ... [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03]], [[ 1.250e-02, 1.250e-02, 1.250e-02, ..., 1.250e-02, 1.250e-02, 1.250e-02], [ 6.250e-02, 6.250e-02, 6.250e-02, ..., 6.250e-02, 6.250e-02, 6.250e-02], [ 1.750e-01, 1.750e-01, 1.750e-01, ..., 1.750e-01, 1.750e-01, 1.750e-01], ..., [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03]]]) - iLayerHeight_mean(time, ifcToto, hru)float64-0.0 -0.0 ... -9.999e+03 -9.999e+03

- long_name :

- height of the layer interface (top of soil = 0) (mean)

- units :

- m

array([[[-0.000e+00, -0.000e+00, -0.000e+00, ..., -0.000e+00, -0.000e+00, -0.000e+00], [ 2.500e-02, 2.500e-02, 2.500e-02, ..., 2.500e-02, 2.500e-02, 2.500e-02], [ 1.000e-01, 1.000e-01, 1.000e-01, ..., 1.000e-01, 1.000e-01, 1.000e-01], ..., [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03]], [[-0.000e+00, -0.000e+00, -0.000e+00, ..., -0.000e+00, -0.000e+00, -0.000e+00], [ 2.500e-02, 2.500e-02, 2.500e-02, ..., 2.500e-02, 2.500e-02, 2.500e-02], [ 1.000e-01, 1.000e-01, 1.000e-01, ..., 1.000e-01, 1.000e-01, 1.000e-01], ... [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03]], [[-0.000e+00, -0.000e+00, -0.000e+00, ..., -0.000e+00, -0.000e+00, -0.000e+00], [ 2.500e-02, 2.500e-02, 2.500e-02, ..., 2.500e-02, 2.500e-02, 2.500e-02], [ 1.000e-01, 1.000e-01, 1.000e-01, ..., 1.000e-01, 1.000e-01, 1.000e-01], ..., [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03], [-9.999e+03, -9.999e+03, -9.999e+03, ..., -9.999e+03, -9.999e+03, -9.999e+03]]]) - scalarTotalSoilLiq(time, hru)float641.169e+03 1.172e+03 ... 1.273e+03

- long_name :

- total mass of liquid water in the soil (instant)

- units :

- kg m-2

array([[1169.4861494 , 1171.95923543, 958.16016633, ..., 1273.18576626, 1275.53986186, 1272.82216379], [1169.48593486, 1171.95901743, 958.15606966, ..., 1273.16738024, 1275.51940637, 1272.80433547], [1169.48570129, 1171.95878033, 958.15155395, ..., 1273.14902732, 1275.4989666 , 1272.78651745], ..., [1160.41594809, 1163.19740491, 934.31440308, ..., 1273.25450403, 1275.61803344, 1272.8950666 ], [1160.40710502, 1163.18858504, 934.30586475, ..., 1273.23245505, 1275.5942375 , 1272.87383523], [1160.40161177, 1163.18311216, 934.30247678, ..., 1273.21199869, 1275.57230158, 1272.85442138]]) - scalarTotalSoilIce(time, hru)float640.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0

- long_name :

- total mass of ice in the soil (instant)

- units :

- kg m-2